
O(N log N) complexity: similar to linear?

So I think I'm going to get buried for asking such a trivial question, but I'm a little confused about something.

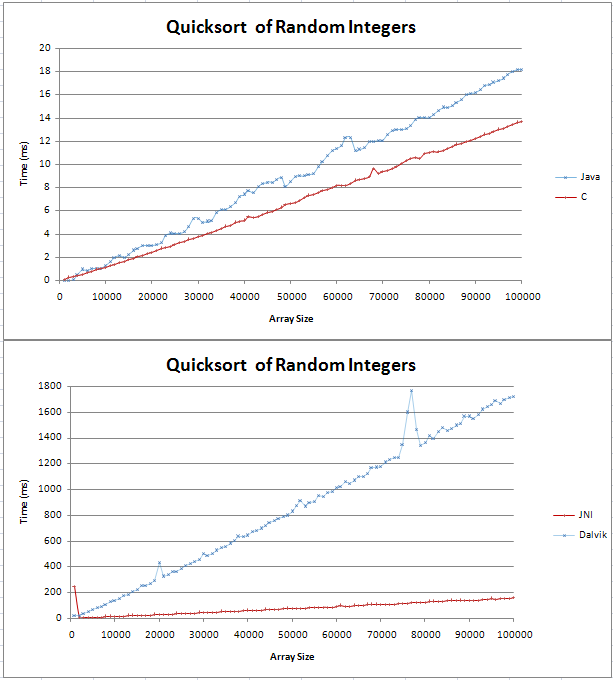

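I implemented quicksort in Java and C and ran some basic comparisons. The graph shows two straight lines, with the C version about 4 ms faster than the Java version when sorting 100,000 random integers.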
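As a rough, hardware-independent way to look at the same growth, one can count comparisons instead of timing. The sketch below is in Python rather than the Java/C of the original test code (which isn't shown here), and the randomized Lomuto partition is an assumption; the actual implementation being benchmarked may partition differently.

```python
import math
import random


def quicksort(a):
    """Sort list `a` in place; return the number of element comparisons made."""
    comparisons = 0
    # Explicit stack of (lo, hi) ranges avoids deep Python recursion.
    stack = [(0, len(a) - 1)]
    while stack:
        lo, hi = stack.pop()
        if lo >= hi:
            continue
        # Random pivot (an assumption) makes the O(n^2) worst case very unlikely.
        p = random.randint(lo, hi)
        a[p], a[hi] = a[hi], a[p]
        pivot = a[hi]
        i = lo
        # Lomuto partition: each element in a[lo:hi] is compared once with the pivot.
        for j in range(lo, hi):
            comparisons += 1
            if a[j] < pivot:
                a[i], a[j] = a[j], a[i]
                i += 1
        a[i], a[hi] = a[hi], a[i]
        stack.append((lo, i - 1))
        stack.append((i + 1, hi))
    return comparisons


def main():
    random.seed(42)
    for n in (10_000, 20_000, 40_000, 80_000, 100_000):
        data = [random.randint(0, 2**31 - 1) for _ in range(n)]
        c = quicksort(data)
        assert data == sorted(data)
        ratio = c / (n * math.log2(n))
        print(f"n={n:>7}  comparisons={c:>9}  c/n={c / n:6.2f}  c/(n log2 n)={ratio:.3f}")


if __name__ == "__main__":
    main()
```

The `c/n` column creeps up only slowly (roughly in proportion to log n) while `c/(n log2 n)` stays roughly constant, which is why a plot of time against n over this range can look almost straight.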

My test code can be found here:

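I wasn't sure what an n log n curve would look like, but I didn't think it would be straight. I just want to check that this is the expected result and that I shouldn't go looking for a bug in my code.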
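One way to check whether an n log n curve "should" look straight is to measure how far it strays from the straight line (chord) joining its endpoints over the plotted range. This is a minimal sketch; the function name `max_deviation_from_line` and the sampling resolution are illustrative choices, not anything from the original test code.

```python
import math


def max_deviation_from_line(n_min, n_max, log=math.log10, steps=10_000):
    """Largest vertical gap between n*log(n) and the straight line (chord)
    joining its endpoints on [n_min, n_max], as a fraction of f(n_max)."""

    def f(n):
        return n * log(n)

    f_lo, f_hi = f(n_min), f(n_max)
    slope = (f_hi - f_lo) / (n_max - n_min)
    worst = 0.0
    for k in range(steps + 1):
        n = n_min + (n_max - n_min) * k / steps
        chord = f_lo + slope * (n - n_min)
        # n*log(n) is convex, so the chord lies on or above the curve.
        worst = max(worst, chord - f(n))
    return worst / f_hi


if __name__ == "__main__":
    print(max_deviation_from_line(10_000, 100_000))
    print(max_deviation_from_line(1, 100_000))
```

For n between 10,000 and 100,000 the gap comes out at roughly 2% of the largest value, and even over 1 to 100,000 it stays near 3%. The base of the logarithm makes no difference, because changing the base only rescales n log n by a constant factor.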

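I put the formula into Excel, and for base 10 it looks like a straight line with a kink at the start. Is this because the difference between log(n) and log(n + 1) increases linearly?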
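A quick numerical check of that last question: log(n + 1) - log(n) = log(1 + 1/n) actually shrinks as n grows. What grows, and only slowly, is the step of n·log(n) itself, which is approximately log(n) + 1/ln(b) for base b. The helper names below (`forward_diffs`, `n_log10_n`) are hypothetical, chosen just for this sketch.

```python
import math


def forward_diffs(f, ns):
    """Return f(n + 1) - f(n) for each n in ns."""
    return [f(n + 1) - f(n) for n in ns]


def n_log10_n(n):
    return n * math.log10(n)


if __name__ == "__main__":
    ns = [10, 100, 1_000, 10_000, 100_000]
    log_steps = forward_diffs(math.log10, ns)
    nlogn_steps = forward_diffs(n_log10_n, ns)
    for n, d_log, d_nlogn in zip(ns, log_steps, nlogn_steps):
        # d_nlogn should be close to log10(n) + 1/ln(10), i.e. log10(n) + 0.434
        print(f"n={n:>7}  log10(n+1)-log10(n)={d_log:.2e}  "
              f"slope of n*log10(n)={d_nlogn:.3f}")
```

Because the slope of n·log10(n) only gains about 1 each time n grows tenfold, the curve bends noticeably only at small n (the "kink at the start") and looks nearly straight afterwards.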

Thanks,

Gavin